In mid- to late November, I noticed that traffic to one of my e-commerce sites had dropped dramatically. Some quick research in Google Analytics showed that our organic traffic from Google had fallen off significantly. This came as a huge shock to us, because we had been cruising along really, really well for some important key terms that drive quality traffic to the site. That equates to lost dollars, since we aren't getting the visits that could turn into conversions.

After some research and conversations with other web developers I know, I concluded that nothing I had done to our site would have caused the drop-off in traffic. What's worse, we seemed to have dropped out of the Top 100 for key terms where we had previously ranked high in the Top 10. Really. Big. Problem.

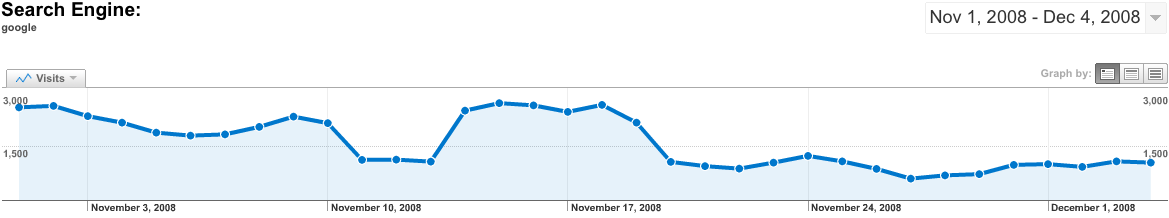

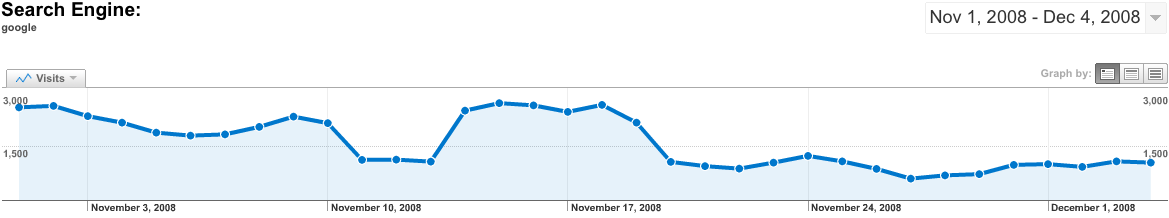

So what happened? My guess is that Google tweaked its search algorithm a little. My reasoning is based on the Google tools I use every day to manage my sites. Take a look first at the image below. It shows our Google organic traffic from November 1, 2008 through December 4, 2008.

As you can see, we were flying along at roughly 1,800 to 2,300 visits a day. Good stuff. Then, around November 11, traffic dropped sharply. I panicked a bit, but didn't change anything on the site. Then on the 14th, we were back up again. Way up. For the next 5 days, we were at around 2,600 visits a day, even peaking at over 2,700 one day. Then, after those five days, things fell apart again. We were down slightly. OK, it's only one day. Don't panic.

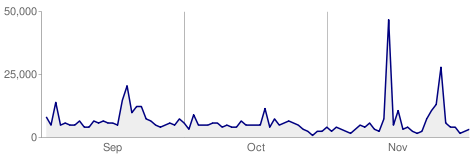

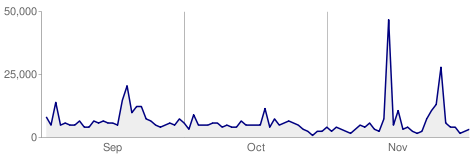

Then I did panic. On November 20th, we were down to just over 1,000 visits. Ouch. Over the next 2 weeks, we went as low as roughly 850 visits a day. This was a problem. What happened? Based on all the research I had done on my own and with other developers, I hadn't changed the site in any way that should have affected our rankings. So what gives? Take a look at the image below, which shows our Google crawl stats.

See the huge spike around the second week of November? That's about when things started to hit the proverbial fan. The spike looks like a deep crawl of our site by Google. I've never seen Google crawl our site this heavily at one time. To me, this indicates that changes were afoot over at Google. What changes? Who knows. Only Google does. What I do hope is that our site comes back up in the rankings, since it's a well-built site that adheres to Google's guidelines for building quality sites.

The bright spot is that we're starting to come back for some of our key terms, and our organic traffic from Google is looking better. At the same time, our traffic from MSN and Yahoo has continued to improve, which is another indication that the change was on Google's side rather than ours.

Finally, Google Webmaster Tools and Google Analytics can give you great insight into what Google sees of your website, as well as how people find and use it. I highly recommend using both if you don't already.